I’m working on an exciting new project at the moment. The main UI element is a management console built with AngularJS that communicates with an HTTP/JSON API built with NancyFx and hosted using the Katana OWIN self-host. I’m quite new to this software stack, having spent the last three years buried in SOA and messaging, but so far it’s all been a joy to work with. AngularJS makes building single page applications so easy, even for a newbie like me, that it almost feels unfair. I love the dependency injection, templating and model binding, and the speed with which you can get up and running. On the server side, NancyFx is perfect for building HTTP/JSON APIs. I really like the design philosophy behind it. The built-in dependency injection, component-oriented design, and convention-over-configuration, for example, are exactly how I like to build software. OWIN is a huge breakthrough for C# web applications. Decoupling the web server from the web framework is something that should have happened a long time ago, and it’s really nice to finally say goodbye to ASP.NET.

Rather than using cookie-based authentication, I’ve decided to go with JSON Web Tokens (JWT). This is a relatively new standard in which the client sends a signed token in a request header, instead of relying on the traditional ASP.NET authentication cookie.

There are quite a few advantages to JWT:

- Cross Domain API calls. Because it’s just a header rather than a cookie, you don’t have any of the cross-domain browser problems that you get with cookies. It makes implementing single-sign-on much easier because the app that issues the token doesn’t need to be in any way connected with the app that consumes it. They merely need to have access to the same shared secret signing key.

- No server affinity. Because the token contains all the necessary user identification, there’s no need for shared server state, such as a call to a database or a shared session store.

- Simple to implement clients. It’s easy to consume the API from other servers, or mobile apps.

So how does it work? A JWT is a simple string of three base64url-encoded values separated by ‘.’:

<header>.<payload>.<hash>

Here’s an example:

eyJ0eXAiOiJKV1QiLCJhbGciOiJIUzI1NiJ9.eyJ1c2VyIjoibWlrZSIsImV4cCI6MTIzNDU2Nzg5fQ.KG-ds05HT7kK8uGZcRemhnw3er_9brQSF1yB2xAwc_E

The header and payload are simple JSON strings. In the example above the header looks like this:

    { "typ": "JWT", "alg": "HS256" }

This is defined in the JWT standard. The ‘typ’ is always ‘JWT’, and the ‘alg’ identifies the algorithm used to sign the token, here HS256 (HMAC with SHA-256). More on this later.

The payload can be any valid JSON, although the standard does define some keys that client and server libraries should respect:

    {
        "user": "mike",
        "exp": 123456789
    }

Here, ‘user’ is a key that I’ve defined, while ‘exp’ is defined by the standard and is the expiration time of the token given as a UNIX time value. Being able to pass around any values that are useful to your application is a great benefit, although you obviously don’t want the token to get too large.

The payload is not encrypted, so you shouldn’t put sensitive information in it. The standard does provide an option for wrapping the JWT in an encrypted envelope, but for most applications that’s not necessary. In my case, an attacker could read the user of a session and the expiration time, but they wouldn’t be able to generate new tokens without the server-side shared secret.
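
To make the point that the payload is readable by anyone, here is a minimal Node.js sketch that decodes the example token from earlier without any key. The helper names `base64UrlDecode` and `readToken` are my own for illustration and are not part of any JWT library:

```javascript
// Decode a base64url segment (the URL-safe alphabet used by JWT) to a UTF-8 string.
function base64UrlDecode(segment) {
  // Map the URL-safe characters back to standard base64.
  // Node's Buffer tolerates the missing '=' padding.
  const base64 = segment.replace(/-/g, '+').replace(/_/g, '/');
  return Buffer.from(base64, 'base64').toString('utf8');
}

// Split a token and parse its header and payload. No key is involved,
// and the signature is NOT checked here.
function readToken(token) {
  const parts = token.split('.');
  if (parts.length !== 3) {
    throw new Error('Expected three dot-separated segments');
  }
  return {
    header: JSON.parse(base64UrlDecode(parts[0])),
    payload: JSON.parse(base64UrlDecode(parts[1]))
  };
}

const example =
  'eyJ0eXAiOiJKV1QiLCJhbGciOiJIUzI1NiJ9' +
  '.eyJ1c2VyIjoibWlrZSIsImV4cCI6MTIzNDU2Nzg5fQ' +
  '.KG-ds05HT7kK8uGZcRemhnw3er_9brQSF1yB2xAwc_E';

const decoded = readToken(example);
console.log(decoded.header);
console.log(decoded.payload);
```

Reading a token this way tells you nothing about whether it is genuine. Only checking the signature with the shared secret does that.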

The token is signed by taking the header and payload, base64url encoding them, concatenating them with ‘.’ and then generating a keyed hash (an HMAC) of the result using the given algorithm and the secret key. The resulting byte array is also base64url encoded and appended to produce the complete token. Here’s some code (taken from John Sheehan’s JWT project on GitHub) that generates a token. As you can see, it’s not at all complicated:

    /// <summary>
    /// Creates a JWT given a payload, the signing key, and the algorithm to use.
    /// </summary>
    /// <param name="payload">An arbitrary payload (must be serializable to JSON via <see cref="System.Web.Script.Serialization.JavaScriptSerializer"/>).</param>
    /// <param name="key">The key bytes used to sign the token.</param>
    /// <param name="algorithm">The hash algorithm to use.</param>
    /// <returns>The generated JWT.</returns>
    public static string Encode(object payload, byte[] key, JwtHashAlgorithm algorithm)
    {
        var segments = new List<string>();
        var header = new { typ = "JWT", alg = algorithm.ToString() };

        byte[] headerBytes = Encoding.UTF8.GetBytes(jsonSerializer.Serialize(header));
        byte[] payloadBytes = Encoding.UTF8.GetBytes(jsonSerializer.Serialize(payload));

        segments.Add(Base64UrlEncode(headerBytes));
        segments.Add(Base64UrlEncode(payloadBytes));

        var stringToSign = string.Join(".", segments.ToArray());
        var bytesToSign = Encoding.UTF8.GetBytes(stringToSign);

        byte[] signature = HashAlgorithms[algorithm](key, bytesToSign);
        segments.Add(Base64UrlEncode(signature));

        return string.Join(".", segments.ToArray());
    }
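
Verification is the mirror image of encoding: recompute the signature over the first two segments and compare it with the third. The following is a rough Node.js sketch of both directions, following the same steps as the C# `Encode` method above but using only Node's built-in `crypto` module. The function names and the secret are made up for the example, and the sketch only handles HS256:

```javascript
const crypto = require('crypto');

// Encode bytes with the URL-safe base64 alphabet and no '=' padding.
function base64UrlEncode(buffer) {
  return buffer.toString('base64')
    .replace(/\+/g, '-')
    .replace(/\//g, '_')
    .replace(/=+$/, '');
}

// Compute the HS256 signature over "<header>.<payload>".
function sign(signingInput, secret) {
  const hmac = crypto.createHmac('sha256', secret).update(signingInput).digest();
  return base64UrlEncode(hmac);
}

// Build a token: encode header and payload, join with '.', append the signature.
function encode(payload, secret) {
  const header = { typ: 'JWT', alg: 'HS256' };
  const signingInput =
    base64UrlEncode(Buffer.from(JSON.stringify(header), 'utf8')) + '.' +
    base64UrlEncode(Buffer.from(JSON.stringify(payload), 'utf8'));
  return signingInput + '.' + sign(signingInput, secret);
}

// Recompute the signature and compare it with the one carried by the token.
function verify(token, secret) {
  const parts = token.split('.');
  if (parts.length !== 3) return false;
  const expected = Buffer.from(sign(parts[0] + '.' + parts[1], secret));
  const actual = Buffer.from(parts[2]);
  // timingSafeEqual throws if the lengths differ, so check them first.
  return expected.length === actual.length &&
    crypto.timingSafeEqual(expected, actual);
}

const token = encode({ user: 'mike' }, 'not-a-real-secret');
console.log(verify(token, 'not-a-real-secret')); // true
console.log(verify(token, 'some-other-secret')); // false
```

Note that `verify` always uses HS256 and ignores the ‘alg’ value in the header. That is a deliberate choice in this sketch, so that a client cannot pick the algorithm the server checks with.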

Implementing JWT authentication and authorization in NancyFx and AngularJS

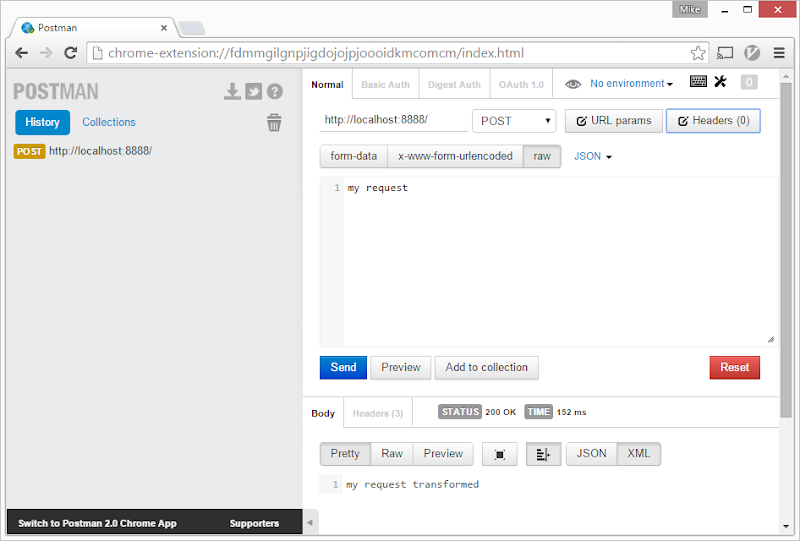

There are two parts to this: first we need a login API, that takes a username (email in my case) and a password and returns a token, and secondly we need a piece of OWIN middleware that intercepts each request and checks that it has a valid token.

The login Nancy module is pretty straightforward. I took John Sheehan’s code and pasted it straight into my project with a few tweaks, so it was just a question of taking the email and password from the request, validating them against my user store, generating a token and returning it as the response. If the email/password doesn’t validate, I just return 401:

    using System;
    using System.Collections.Generic;
    using Nancy;
    using Nancy.ModelBinding;
    using MyApp.Api.Authorization;

    namespace MyApp.Api
    {
        public class LoginModule : NancyModule
        {
            private readonly string secretKey;
            private readonly IUserService userService;

            public LoginModule (IUserService userService)
            {
                Preconditions.CheckNotNull (userService, "userService");
                this.userService = userService;

                Post ["/login/"] = _ => LoginHandler(this.Bind<LoginRequest>());

                secretKey = System.Configuration.ConfigurationManager.AppSettings ["SecretKey"];
            }

            public dynamic LoginHandler(LoginRequest loginRequest)
            {
                if (userService.IsValidUser (loginRequest.email, loginRequest.password)) {

                    var payload = new Dictionary<string, object> {
                        { "email", loginRequest.email },
                        { "userId", 101 }
                    };

                    var token = JsonWebToken.Encode (payload, secretKey, JwtHashAlgorithm.HS256);

                    return new JwtToken { Token = token };
                } else {
                    return HttpStatusCode.Unauthorized;
                }
            }
        }

        public class JwtToken
        {
            public string Token { get; set; }
        }

        public class LoginRequest
        {
            public string email { get; set; }
            public string password { get; set; }
        }
    }

On the AngularJS side, I have a controller that calls the LoginModule API. If the request is successful, it stores the token in the browser’s sessionStorage, then decodes the payload and stores that information in sessionStorage too. To update the rest of the application, and allow other components to change state to show a logged-in user, it sends an event (via $rootScope.$emit) and then redirects to the application’s root path. If the login request fails, it simply shows a message to inform the user:

    myAppControllers.controller('LoginController', function ($scope, $http, $window, $location, $rootScope) {
        $scope.message = '';
        $scope.user = { email: '', password: '' };

        $scope.submit = function () {
            $http
                .post('/api/login', $scope.user)
                .success(function (data, status, headers, config) {
                    $window.sessionStorage.token = data.token;
                    // The payload segment is base64url encoded, so map it back to
                    // the standard base64 alphabet before handing it to atob.
                    var payloadSegment = data.token.split('.')[1].replace(/-/g, '+').replace(/_/g, '/');
                    var user = angular.fromJson($window.atob(payloadSegment));
                    $window.sessionStorage.email = user.email;
                    $window.sessionStorage.userId = user.userId;
                    $rootScope.$emit("LoginController.login");
                    $location.path('/');
                })
                .error(function (data, status, headers, config) {
                    // Erase the token if the user fails to login
                    delete $window.sessionStorage.token;

                    $scope.message = 'Error: Invalid email or password';
                });
        };
    });

Now that we have the JWT token stored in the browser’s sessionStorage, we can use it to ‘sign’ each outgoing API request. To do this we create an interceptor for Angular’s http module. This does two things: on the outbound request it adds an Authorization header ‘Bearer <token>’ if the token is present. This will be decoded by our OWIN middleware to authorize each request. The interceptor also checks the response. If there’s a 401 (unauthorized) response, it simply bumps the user back to the login screen.

    myApp.factory('authInterceptor', function ($rootScope, $q, $window, $location) {
        return {
            request: function (config) {
                config.headers = config.headers || {};
                if ($window.sessionStorage.token) {
                    config.headers.Authorization = 'Bearer ' + $window.sessionStorage.token;
                }
                return config;
            },
            responseError: function (response) {
                if (response.status === 401) {
                    $location.path('/login');
                }
                return $q.reject(response);
            }
        };
    });

    myApp.config(function ($httpProvider) {
        $httpProvider.interceptors.push('authInterceptor');
    });

The final piece is the OWIN middleware that intercepts each request to the API and validates the JWT token.

We want some parts of the API to be accessible without authorization, such as the login request and the API root, so we maintain a list of exceptions. Currently this is just hard-coded, but it could be pulled from some configuration store. When a request comes in, we first check whether the path matches any item in the exception list. If it doesn’t, we check for the presence of an authorization token. If the token is not present, we cancel the request processing (by not calling the next AppFunc) and return a 401 status code. If we find a JWT token, we attempt to decode it. If the decode fails, we again cancel the request and return 401. If it succeeds, we add OWIN environment keys for the ‘userId’ and ‘email’ so that they are accessible to the rest of the application, and allow processing to continue by calling the next AppFunc.

    using System;
    using System.Collections.Generic;
    using System.Threading.Tasks;

    namespace MyApp.Api.Authorization
    {
        using AppFunc = Func<IDictionary<string, object>, Task>;

        /// <summary>
        /// OWIN add-in module for JWT authorization.
        /// </summary>
        public class JwtOwinAuth
        {
            private readonly AppFunc next;
            private readonly string secretKey;
            private readonly HashSet<string> exceptions = new HashSet<string>{
                "/",
                "/login",
                "/login/"
            };

            public JwtOwinAuth (AppFunc next)
            {
                this.next = next;
                secretKey = System.Configuration.ConfigurationManager.AppSettings ["SecretKey"];
            }

            public Task Invoke(IDictionary<string, object> environment)
            {
                var path = environment ["owin.RequestPath"] as string;
                if (path == null) {
                    throw new ApplicationException ("Invalid OWIN request. Expected owin.RequestPath, but not present.");
                }

                if (!exceptions.Contains(path)) {
                    var headers = environment ["owin.RequestHeaders"] as IDictionary<string, string[]>;
                    if (headers == null) {
                        throw new ApplicationException ("Invalid OWIN request. Expected owin.RequestHeaders to be an IDictionary<string, string[]>.");
                    }

                    if (headers.ContainsKey ("Authorization")) {
                        var token = GetTokenFromAuthorizationHeader (headers ["Authorization"]);
                        try {
                            var payload = JsonWebToken.DecodeToObject (token, secretKey) as Dictionary<string, object>;
                            environment.Add("myapp.userId", (int)payload["userId"]);
                            environment.Add("myapp.email", payload["email"].ToString());
                        } catch (SignatureVerificationException) {
                            return UnauthorizedResponse (environment);
                        }
                    } else {
                        return UnauthorizedResponse (environment);
                    }
                }

                return next (environment);
            }

            public string GetTokenFromAuthorizationHeader(string[] authorizationHeader)
            {
                if (authorizationHeader.Length == 0) {
                    throw new ApplicationException ("Invalid authorization header. It must have at least one element");
                }

                var token = authorizationHeader [0].Split (' ') [1];
                return token;
            }

            public Task UnauthorizedResponse(IDictionary<string, object> environment)
            {
                environment ["owin.ResponseStatusCode"] = 401;
                return Task.FromResult (0);
            }
        }
    }
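
The decision flow in the middleware is easy to lose among the OWIN plumbing, so here it is again as a small framework-free JavaScript sketch. This is not a port of the class above: `getBearerToken`, `authorize` and the `decodeToken` callback are hypothetical names, headers are assumed to arrive as a plain object with lower-case keys (as Node.js provides them), and treating every decode failure or malformed header as a 401 is my own assumption rather than something the C# code does:

```javascript
// Paths that may be called without a token (mirrors the hard-coded list above).
const openPaths = new Set(['/', '/login', '/login/']);

// Extract the token from an "Authorization: Bearer <token>" header value.
// Returns null instead of throwing when the header is missing or malformed.
function getBearerToken(headerValue) {
  if (typeof headerValue !== 'string') return null;
  const match = /^Bearer\s+(\S+)$/i.exec(headerValue.trim());
  return match ? match[1] : null;
}

// Decide what to do with a request.
// decodeToken is a hypothetical callback that returns the payload for a
// valid token and throws for an invalid one.
function authorize(path, headers, decodeToken) {
  if (openPaths.has(path)) {
    return { allowed: true, user: null };
  }
  const token = getBearerToken(headers['authorization']);
  if (token === null) {
    return { allowed: false, status: 401 };
  }
  try {
    return { allowed: true, user: decodeToken(token) };
  } catch (err) {
    return { allowed: false, status: 401 };
  }
}

// Example with a fake decoder that accepts exactly one token.
const fakeDecode = function (token) {
  if (token !== 'good-token') throw new Error('bad signature');
  return { email: 'mike@example.com', userId: 101 };
};

console.log(authorize('/login', {}, fakeDecode));
console.log(authorize('/users', { authorization: 'Bearer good-token' }, fakeDecode));
console.log(authorize('/users', { authorization: 'Bearer' }, fakeDecode));
```

Keeping the decision in a pure function like this makes it straightforward to unit test the open paths, the missing header, and the bad token cases without spinning up a web server.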

So far this is all working very nicely. There are some important missing pieces. I haven’t implemented an expiry key in the JWT token, or expiration checking in the OWIN middleware. When the token expires, it would be nice if there was some algorithm that decides whether to simply issue a new token, or whether to require the user to sign-in again. Security dictates that tokens should expire relatively frequently, but we don’t want to inconvenience the user by asking them to constantly sign in.
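
As a starting point for that missing piece, here is one possible shape for the expiry check and the renew-or-sign-in decision, sketched in JavaScript. Everything in it is an assumption for illustration: the three policy constants, the `sessionStart` key (my own invention, recording when the user last entered a password), and the function names. Only ‘exp’ and ‘iat’ come from the JWT standard. It also assumes the token’s signature has already been verified:

```javascript
// Hypothetical policy values; tune them for your own application.
const TOKEN_LIFETIME_SECONDS = 15 * 60;    // each token is valid for 15 minutes
const RENEW_GRACE_SECONDS = 5 * 60;        // an expired token can be swapped for this long
const MAX_SESSION_SECONDS = 8 * 60 * 60;   // force a real sign-in after 8 hours

// Build the claims for a new token. Pass the original sessionStart when
// renewing so the session age keeps counting from the last real sign-in.
function createClaims(user, nowSeconds, sessionStart) {
  return {
    email: user.email,
    userId: user.userId,
    sessionStart: sessionStart === undefined ? nowSeconds : sessionStart,
    iat: nowSeconds,
    exp: nowSeconds + TOKEN_LIFETIME_SECONDS
  };
}

// Classify an already signature-checked payload:
//   'valid' - not expired, carry on
//   'renew' - expired recently and the session is young enough: issue a new token
//   'login' - anything else: ask the user to sign in again
function checkExpiry(payload, nowSeconds) {
  if (typeof payload.exp !== 'number') return 'login';
  if (nowSeconds < payload.exp) return 'valid';
  const sinceExpiry = nowSeconds - payload.exp;
  const sessionAge = nowSeconds - payload.sessionStart;
  if (sinceExpiry <= RENEW_GRACE_SECONDS && sessionAge < MAX_SESSION_SECONDS) {
    return 'renew';
  }
  return 'login';
}

const user = { email: 'mike@example.com', userId: 101 };
const claims = createClaims(user, 1000);    // expires at 1000 + 900 = 1900
console.log(checkExpiry(claims, 1500));     // valid
console.log(checkExpiry(claims, 2000));     // renew
console.log(checkExpiry(claims, 5000));     // login
```

Short-lived tokens limit the damage if one leaks, while the grace window and session cap stop an active user from being asked to sign in every few minutes. Whether those particular numbers are sensible depends entirely on the application.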

JWT is a really nice way of authenticating HTTP/JSON web APIs. It’s definitely worth looking at if you’re building single page applications, or any API-first software.