I’ve long been confused about best practices for AMQP channel handling. This post explains my current thinking, partly in the hope of eliciting feedback.

The conversation between a message broker and a client is two-way. Both the client and the server can initiate ‘communication events’: the client can invoke a method on the server, such as ‘publish’ or ‘declare’, and the server can invoke a method on the client, such as ‘deliver’ or ‘return’. Because the client needs to receive methods from the server, it needs to keep a connection open for its lifetime. This means that broker connections may last for long periods: hours, days, or weeks. Maintaining these connections is expensive, both for the client and the server. In order to have many logical connections without the overhead of many physical TCP/IP connections, AMQP has the concept of a ‘channel’ (confusingly represented by the IModel interface in the .NET client; the Java client calls it Channel). You can create multiple channels on a single connection, and it’s relatively cheap to create and dispose of them.

But what are the recommended rules for handling these channels? Should I create a new one for every operation? Or should I just keep a single one around and execute every method on it?

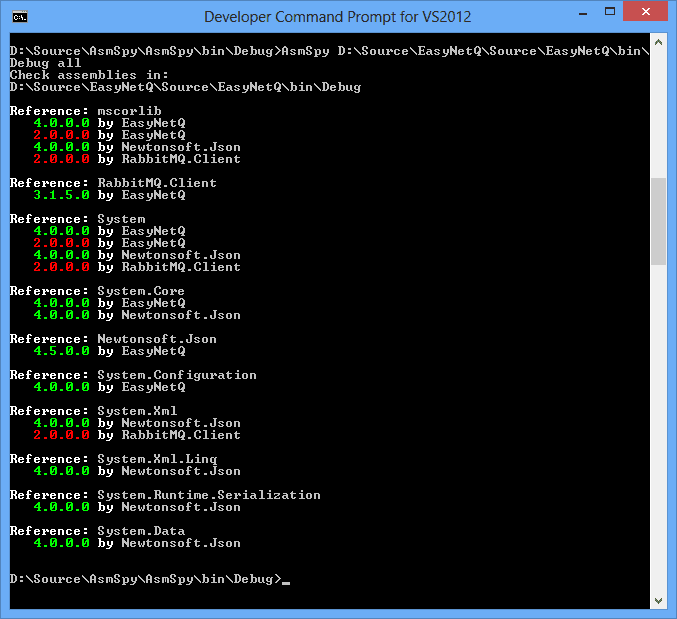

First, some hard and fast rules (note that I’m using the RabbitMQ .NET client for reference here; other clients might behave differently):

Channels are not thread safe. You should create and use a channel on a single thread. From the .NET client documentation (section 2.10):

“In general, IModel instances should not be used by more than one thread simultaneously: application code should maintain a clear notion of thread ownership for IModel instances.”

Apparently this is not a hard and fast rule with the Java client, just good advice. The Java client serializes all calls to a channel.

An ACK should be sent on the same channel on which the delivery was received. The delivery tag is scoped to the channel, and an ACK sent on a channel other than the one that received the delivery will cause a channel error. This somewhat contradicts the ‘Channels are not thread safe’ directive above, since you will probably ACK on a different thread from the one where you invoked basic.consume, but this seems to be acceptable.
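To make the delivery-tag scoping concrete, here is a toy in-memory model. It is only a sketch: `ToyChannel` and `ChannelError` are names I have made up for illustration and are not part of any RabbitMQ client. All it assumes is that each channel keeps its own tag counter and rejects an ACK for a tag it never issued.

```python
class ChannelError(Exception):
    """Stands in for the channel-level error a broker would raise."""


class ToyChannel:
    """Hypothetical model of one channel's delivery-tag bookkeeping."""

    def __init__(self, name):
        self.name = name
        self._next_tag = 1
        self._unacked = {}  # delivery tag -> message body

    def deliver(self, body):
        # Tags are a per-channel counter, so every channel starts at 1.
        tag = self._next_tag
        self._next_tag += 1
        self._unacked[tag] = body
        return tag

    def ack(self, tag):
        # A tag this channel never issued (or has already acked) is an error.
        if tag not in self._unacked:
            raise ChannelError(f"unknown delivery tag {tag} on channel {self.name}")
        return self._unacked.pop(tag)


channel_a = ToyChannel("a")
channel_b = ToyChannel("b")

tag = channel_a.deliver("order-created")

try:
    channel_b.ack(tag)  # wrong channel: b never delivered this tag
    wrong_channel_failed = False
except ChannelError:
    wrong_channel_failed = True

acked_body = channel_a.ack(tag)  # right channel: succeeds
```

Because the counters are independent, the failure is not even the worst case: if channel b happened to have its own outstanding tag 1, the misdirected ACK in this model would succeed and acknowledge the wrong message.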

Now some softer suggestions:

It seems neater to create a channel for each consumer. I like thinking of the consumer and channel as a unit: when I create a consumer, I also create a channel for it to consume from. When the consumer goes away, so does the channel and vice-versa.
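A minimal sketch of the consumer-and-channel-as-a-unit idea follows. `FakeConnection`, `FakeChannel` and `Consumer` are hypothetical stand-ins rather than a real client API; the point is only the ownership: the consumer opens its own channel when it is created and closes it when it goes away.

```python
class FakeChannel:
    """Hypothetical stand-in for a client library channel."""

    def __init__(self):
        self.is_open = True
        self.consumer_tags = []

    def basic_consume(self, queue, on_message):
        # A real client would register the callback with the broker here.
        consumer_tag = f"ctag-{len(self.consumer_tags) + 1}"
        self.consumer_tags.append(consumer_tag)
        return consumer_tag

    def close(self):
        self.is_open = False


class FakeConnection:
    """Hypothetical stand-in for a connection that hands out channels."""

    def __init__(self):
        self.channels = []

    def channel(self):
        new_channel = FakeChannel()
        self.channels.append(new_channel)
        return new_channel


class Consumer:
    """Owns exactly one channel; closing the consumer closes the channel."""

    def __init__(self, connection, queue, on_message):
        self._channel = connection.channel()
        self.consumer_tag = self._channel.basic_consume(queue, on_message)

    @property
    def is_open(self):
        return self._channel.is_open

    def close(self):
        self._channel.close()

    def __enter__(self):
        return self

    def __exit__(self, exc_type, exc, tb):
        self.close()
        return False  # do not swallow exceptions


connection = FakeConnection()
with Consumer(connection, "orders", print) as orders_consumer:
    open_while_consuming = orders_consumer.is_open
closed_after_exit = not orders_consumer.is_open
```

Nothing else ever sees the channel, so there is no way to publish on it by accident or to share it with a second consumer.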

Do not mix publishing and consuming on the same channel. If you follow the suggestion above, it implies that you have dedicated channels for each consumer. It follows that you should create separate channels for server-originated and client-originated methods.

Maintain a long running publish channel. My current implementation of EasyNetQ makes creating channels for publishing the responsibility of the user. I now think this is a mistake. It encourages the pattern of: create a channel, publish, dispose of the channel. This pattern doesn’t work with publisher confirms, where you need to keep the channel open at least until you receive an ACK or NACK. And although creating channels is relatively lightweight, there is still some overhead. I now favour the approach of maintaining a single publish channel on a dedicated thread and marshalling publish (and declare) calls to it. This is potentially a bottleneck, but the impressive performance of non-transactional publishing means that I’m not overly concerned about it.
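Here is roughly what I mean by marshalling publishes to a dedicated thread, as a sketch rather than EasyNetQ’s actual implementation. `PublishWorker` and `FakePublishChannel` are hypothetical names; the fake channel just records which thread called it. Callers on any thread enqueue a request and get back a `Future`; only the worker thread ever touches the channel.

```python
import queue
import threading
from concurrent.futures import Future


class FakePublishChannel:
    """Hypothetical stand-in for a channel; records which thread called it."""

    def __init__(self):
        self.published = []
        self.caller_threads = set()

    def basic_publish(self, exchange, routing_key, body):
        self.caller_threads.add(threading.get_ident())
        self.published.append((exchange, routing_key, body))


class PublishWorker:
    """Owns one channel and runs every publish on a single dedicated thread."""

    _STOP = object()  # sentinel that tells the worker loop to exit

    def __init__(self, channel_factory):
        self._channel_factory = channel_factory
        self._requests = queue.Queue()
        self._thread = threading.Thread(target=self._run, daemon=True)
        self._thread.start()

    def _run(self):
        # The channel is created and used only on this thread.
        channel = self._channel_factory()
        while True:
            item = self._requests.get()
            if item is self._STOP:
                break
            future, args = item
            try:
                channel.basic_publish(*args)
                future.set_result(None)
            except Exception as exc:
                future.set_exception(exc)

    def publish(self, exchange, routing_key, body):
        # Safe to call from any thread; the Future completes once the
        # worker thread has made the publish call.
        future = Future()
        self._requests.put((future, (exchange, routing_key, body)))
        return future

    def close(self):
        self._requests.put(self._STOP)
        self._thread.join()


fake_channel = FakePublishChannel()
worker = PublishWorker(lambda: fake_channel)


def publish_some(caller_id):
    for i in range(5):
        worker.publish("orders", "created", f"{caller_id}-{i}").result(timeout=5)


# Publish from several caller threads at once.
callers = [threading.Thread(target=publish_some, args=(n,)) for n in range(4)]
for caller in callers:
    caller.start()
for caller in callers:
    caller.join()
worker.close()
```

In this sketch the `Future` completes as soon as the publish call returns. With publisher confirms you would presumably hold it open and complete it when the matching ACK or NACK arrives, which is exactly why the channel has to outlive the individual publish.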